Running SSIS packages continuously without scheduling

No more Batch ETL

A few weeks ago I wrote a post about the concept of having continuous execution of ETL individual processes to achieve ‘eventual consistency‘. In that post I made the case to step away from ‘Batch’ execution of ETLs, where related processes are executed as a mini workflow, in favour if fully independent execution of the individual (modular) ETL processes.

I have spend some time developing this concept in SQL Server using my VEDW Data Vault sample set and wanted to share some techniques how to achieve this.

It works even better than expected, if you have a SQL Server environment it’s worth checking out the code below. But the concepts are applicable for various architectures of course.

As a brief reminder, the ‘eventual consistency’ approach aims to remove all dependencies in loading data by allowing each individual ETL process (i.e. Hub, Link, Satellite) to run whenever possible – in a queue of sorts.

Enabling this requires a pattern change, for instance in the Data Vault example used here the ETLs needed to be updated (=regenerated) to load from the Persistent Staging Area (PSA) using their own self-managed load window. This load window is set for every unique execution based on the committed data in the PSA (based on the ETL control framework, not the RDBMS).

The load windows between individual ETLs differ slightly as each ETL process runs at slightly different time, but this is no problem since you can derive the last moment consistency (Referential Integrity) was achieved using the control framework metadata. This information can be used for loading data into the next layer.

The setup – two continuous queues

What I want to achieve for this test is to have two separate ‘queues’ running continuously:

- One queue that loads new data (delta) into the Staging / Persistent Staging Area, and

- One that loads data into the Data Vault from the Persistent Staging Area.

As outlined above there are no dependencies managed between processes – the ETLs are required to ‘just work’ every time they are executed.

To up the ante a bit more, I want the first queue (PSA) to run more frequently and using more resources than the Data Vault queue.

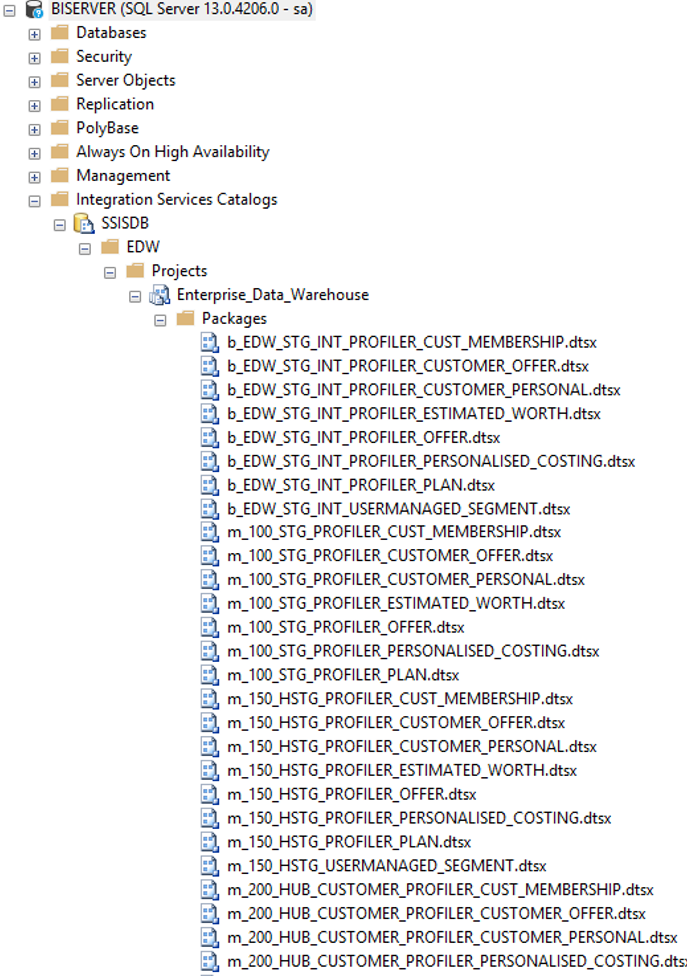

I have tested this concept using SQL Server and SQL Server Integration Services (SSIS), and have generated the VEDW sample set as physical SSIS ETL packages (using Biml, of course). The generated packages have been moved (deployed) to an Integration Services Catalog (the default SSISDB was used). The result looks like this:

This screenshot shows a subset of the deployed packages in the SSIS catalog. In the ETL control framework I use (DIRECT) the workflows start with ‘b_’ (for ‘batch’) and the individual ETLs start with ‘m_’. (for ‘modular’). For all intents and purposes I have generated the ‘batch’ ones to run the Staging and Persistent Staging steps in one go, but arguably you can load into the PSA directly.

To keep things simple I will create one queue for the ‘batch’ packages (containing Staging and Persistent Staging), and another queue for all the Data Vault objects (prefixed by ‘m_200’).

Setting up a continuously running process queue for SSIS

Running something continuously, over and over again – almost as an ETL service, in SSIS isn’t straightforward. There is no out of the box option for this, but there is a trick to make this work (unless you want to invest in the message broker). An easy way to achieve this is to create a job in SQL Server Agent containing a single T-SQL step. The job can be configured to start when the server starts, it will keep running regardless as soon as you start it.

The T-SQL step is where the real process handling takes place and where the polling is implemented. The idea is to create an endless loop that:

- Checks if new ETLs can start based on parameters, and wait if this is not the case. The number of concurrent ETL executions is the parameter used in this example. If this is less than 3, the next ETL can be started according to a priority list (the queue). If there are already 3 (or more) ETLs running there will be a 30 second wait before new attempts are made.

- Execute the SSIS package that is next in line directly from the SSIS catalog using the corresponding T-SQL commands. The priority order is set by the queue, but in this example organised by last execution time. The jobs that haven’t been running for the longest will be prioritised in the queue.

- Handles exceptions to avoid the SQL statement to fail. This is implemented using a TRY…CATCH block that deactivates the ETLs in the control framework if there is an issue so they won’t be attempted again unless reactivated. An example use-case is when the queue attempts to execute a package which is not available in the package catalog (hasn’t been deployed).

Thanks Luciano Machado for the WHILE 1=1 idea!

The logic is as follows:

-- Create a temporary procedure to act as parameter input, i.e. calculate the number of active ETLs

CREATE PROCEDURE #runningJobs @NUM_JOBS int OUTPUT AS

(

SELECT @NUM_JOBS = (SELECT COUNT(*) FROM <ETL control framework> WHERE <execution status is 'running'>)

)

GO

DECLARE @MAX_CONCURRENCY INT

DECLARE @NUM_RUNNING_JOBS INT

DECLARE @DELAY_TIME VARCHAR(8)

DECLARE @JOBNAME as VARCHAR(256)

DECLARE @CURRENT_TIME VARCHAR(19)

SELECT @MAX_CONCURRENCY = 3

SELECT @DELAY_TIME ='00:00:30' -- This is the time the queue waits upon detecting concurrency

WHILE 1 = 1

BEGIN

EXEC #runningJobs @NUM_RUNNING_JOBS OUTPUT

--Whenever the number of jobs exceeds the parameter, wait for a bit (as per the delay time)

WHILE (@NUM_RUNNING_JOBS >= @MAX_CONCURRENCY)

BEGIN

WAITFOR DELAY @DELAY_TIME

EXEC #runningJobs @NUM_RUNNING_JOBS OUTPUT

END

-- When a spot becomes available, run the next ETL(s) from the queue

SELECT TOP 1 @JOBNAME = ETL_PROCESS_NAME

FROM

( -- Select the Module that hasn't run the longest (oldest age)

SELECT *

FROM <the queue>

) QUEUE

ORDER BY <latest execution datetime> ASC

BEGIN TRY -- Execute the ETL

Declare @execution_id bigint

EXEC [SSISDB].[catalog].[create_execution] @package_name=@JOBNAME, @execution_id=@execution_id OUTPUT, @folder_name=N'EDW', @project_name=N'Enterprise_Data_Warehouse', @use32bitruntime=False, @reference_id=Null

Select @execution_id

DECLARE @var0 smallint = 1

EXEC [SSISDB].[catalog].[set_execution_parameter_value] @execution_id, @object_type=50, @parameter_name=N'LOGGING_LEVEL', @parameter_value=@var0

EXEC [SSISDB].[catalog].[start_execution] @execution_id

END TRY

BEGIN CATCH

<do something i.e. disable ETL in queue, send email etc.>

END CATCH

WAITFOR DELAY '00:00:05' -- A delayer to throttle execution. A minimum delay (1 second) is required to allow the systems to administer ETL status properly.

END

DROP PROCEDURE #runningJobs

When this is started, either directly as SQL statement or as part of a SQL Agent Job (T-SQL step) the process will keep on running until stopped.

Organising the queue

What about the queue itself? In its simplest form this can be a view that lists out the ETL packages that need to be executed, as long as the name corresponds with the name of the object in the SSIS catalog. At the very least the .dtsx suffix needs to be added as this is how package files are stored in SSIS.

The view I have used for this queries the ETL object names from the ETL control framework, as they need to be declared anyway for the control wrapper to work. In other words, the ETL names are already there. All I need is to select the most recent execution instance for each ETL I want to be in scope, so this can be listed in ascending order. This will force the ETL process that hasn’t run the longest will be put on top of the queue.

It becomes really easy to set up various queues as all it takes is a T-SQL statement and corresponding view (or other object). Creating the 2nd queue was a matter of seconds and in similar fashion a 3rd Data Mart queue can be configured.

The result

When executing the queues you can see the ETL process executions happening in the ETL control framework. I specifically started the Data Vault queue first to confirm no data would be loaded, which makes sense because the PSA was still empty. After a few minutes I started the Staging / Persistent Staging queue, and one by one (three at a time really due to the concurrency setting) the PSA tables were being populated. At the same time the Data Vault queue processes started picking up the delta, as soon as the PSA process for a specific table was completed succesfully.

With the queue being a view you can monitor the order change while processes are executed. An ETL process that was top off the list moves back to the bottom, and slowly makes its way back up again, as shown in the following screenshot:

It all works really well and after a while Referential Integrity was achieved. Also, the results were 100% the same as they were in the VEDW and Batch approaches. Making changes in the data were also picked up and propagated without any problems.

Final thoughts

The number of currently executing ETLs as used in the example here is a fairly crude mechanism. But, it is clear to see that this can easily be adjusted to more sophisticated resource management parameters such as CPU or memory usage. While I didn’t implement this for the example here, a queue should also have ways to validate completeness of ETL processes. This is relevant because previously the internal dependencies where safeguarded in the batch style mini workflows, but since the batch is gone you need other ways to make sure all required ETL processes are present.

The easiest way to apply checks like these is to validate if every Link or Satellite has corresponding Hubs relative to the (shared) source table. The same applies to Link-Satellites of course, which needs its supporting Link and it’s Hub ETLs to be present somewhere in the queue. You need to prevent having, say, a Satellite that is loaded from a specific source table without a Hub process that loads from the same table. This is nothing new – the same rules apply and the required metadata is already available. It’s just that enforcing these rules is slightly different in a queue.

‘The queue’ is a good concept and works really well. If you have a PSA (why wouldn’t you?) you may want to give it a go as the results surpassed my expectation.

As a nice side effect, it also makes re-inialisation super easy. All you need to truncate your control table (or at least the relevant records) and the system does the rest to reload deterministically. Copying data is not needed anymore, and you can’t even make a mistake here because the patterns can re-process already loaded data without failure.

On top of this it also natively handles graceful completion of the ETL control framework wrapper because stopping the job doesn’t kill the SSIS package execution, it just prevents new processes from spawning. This means you can even put it on a schedule if you want the queue to operate only limited amounts of time. Win-win!

This is an example of how ‘eventual consistency’ can be implemented using SQL Server, and I recommend looking into it.

A quick question regarding your current implementation of the queue, how do you go about updating end date of package that was executed?

Also based on the query: SELECT @NUM_JOBS = (SELECT COUNT(*) FROM WHERE ) – how would you distinguish a package that was executed from a queue vs a package that was kicked off by a scheduled job?

Hi Tommy, the end-date of the package execution (finish date/time) is handled via the DIRECT controls that are part of every individual ETL. Have a look at the DIRECT control framework on the Github (send me your Github account)! Basically every package in this case has an event handler that writes the time to the repository when the package finishes one way or another. All logic is handled in the DIRECT wrapper / APIs to make each ETL stand alone. The queue is only the orchestration engine.

Because each package is stand-alone in the sense that it can be executed in any time and any order there is no real difference in packages being kicked-off via the queue daemon or via manual execution in Visual Studio or the package catalog. I don’t record which scheduled executed the package as such, although it can be captured in the DIRECT repository I suppose.

I’m writing some more on this in a new paper that covers Data Mart delivery, which I’m intending to publish this week.

I’m a massive fan of this mechanism. Together with eventual consistency and DIRECT it is a very powerful way to manage the DWH loads.