A gentle introduction to bitemporal data challenges

“Your data is wrong!” No, we’re just looking at it from different points in time.

A flexible data solution, such as a data warehouse, has the capability to look at data from different perspectives.

One of these perspectives is ‘bitemporal’, which means looking at data from different vantage points in time. It is one of the more interesting challenges many organisations face when it comes to working with data.

Data is created everywhere in the organisation, and the data solution collects this data for analysis and reporting. When it comes to data, the data solution is somewhat of a ‘corporate memory’. Even when the operational systems and processes that originally created the data do not have it anymore, the data solution still remembers (compliance permitting).

And sometimes, this might just save your career.

Meet Bob. Bob here is tasked with providing reporting for the business.

This time around, management asks him for the end-of-month sales report for January. Since he is really keen to make a good impression, he plans to stay up until just past midnight to send the results as soon as possible.

So, he sets the alarm to just before midnight, splashes some water on his face, and boots up the computer. As soon as midnight hits, he checks that all data has been loaded correctly into the data solution and that everything he needs is available.

Just to be sure. One can’t be too careful. This is important.

When he sees that all data has been loaded, he hits the appropriate button and produces the Business Intelligence report.

This report queries the necessary data from the data solution, sums it all up, and formats it in a nice way.

It’s a great result – 100 units! Management sure must be happy.

Fast-forward to one week later…

Uh oh, this can’t be good. What’s happening here?

It turns out that Bob’s manager has run the same report, for the same month of January. But he’s getting different results.

Bob runs the same report again, for January. Sure enough, he’s getting something different as well. He now sees 80 units sold. How can this be?

He is sure that he got 100 units the first time. He even has a copy of the original report as evidence. But now, the system reports 80.

What happened here, and which number is the right one?

Well, there is only one thing to do here, and that is to dive into the data solution and query the data to understand what has caused this behaviour.

The data solution is the only place where the history of transactions across different systems is maintained. Surely it is possible to get to the bottom of this.

So what does it do?

Thankfully, the data solution will track each individual transaction as it was at the point in time it was received. It’s like keeping an accounting balance.

So, if we sell 4 units on the first day of January (2023-01-01), the balance then will be 4 at that time. The balance is an interpretation of the transaction value up to that point in time.

On 2023-01-04, another 16 units are sold. This brings the balance to 20. Then, on 2023-01-22 a massive 60 units are reported – resulting in a balance of 80.

Last, but not least, on 2023-01-26, a final 20 units are sold. There are no further transactions for the rest of the month, so the closing balance is 100.
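The running balance described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic; the `sales` list and the variable names are made up for this example and do not come from any particular data solution.

```python
from datetime import date
from itertools import accumulate

# January sales from the example: (transaction date, units sold).
sales = [
    (date(2023, 1, 1), 4),
    (date(2023, 1, 4), 16),
    (date(2023, 1, 22), 60),
    (date(2023, 1, 26), 20),
]

# The balance after each transaction is the running sum of all units so far.
balances = list(accumulate(units for _, units in sales))

for (day, units), balance in zip(sales, balances):
    print(f"{day}  units={units:>3}  balance={balance:>3}")
```

The last value in `balances` is the closing balance for the month.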

So far, so good.

But then, something else happens. On 2023-02-02, a correction comes in, rather late, because somebody didn’t fill in the correct figures. It turns out that the first two sales (on 2023-01-01 and 2023-01-04) were incorrect and needed to be adjusted.

To fix this, the sales representative had entered an adjustment as a separate transaction, dated 2023-01-10, just after the two sales. He forgot to commit this transaction, however, and only realised this after month-end.

As a result, the transaction is created on 2023-02-02 and this is when it is received by the data solution.
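To make the two dates on this adjustment concrete, here is a minimal sketch of how such records could be represented. The `SalesRecord` class and its field names are hypothetical, and I am assuming that the four regular sales were received on the same day they were made, which the story implies but does not state.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class SalesRecord:
    transaction_date: date  # the date the transaction is dated in the sales system
    received_date: date     # the date the data solution received the record
    units: int


# Assumption: the four regular sales arrived on the day they were made.
records = [
    SalesRecord(date(2023, 1, 1), date(2023, 1, 1), 4),
    SalesRecord(date(2023, 1, 4), date(2023, 1, 4), 16),
    SalesRecord(date(2023, 1, 22), date(2023, 1, 22), 60),
    SalesRecord(date(2023, 1, 26), date(2023, 1, 26), 20),
    # The adjustment is dated 2023-01-10 but only arrives on 2023-02-02.
    SalesRecord(date(2023, 1, 10), date(2023, 2, 2), -20),
]

# A record is late-arriving when it is received after the date it is dated.
late_arriving = [r for r in records if r.received_date > r.transaction_date]

for record in late_arriving:
    delay = (record.received_date - record.transaction_date).days
    print(f"{record.units} units dated {record.transaction_date}, received {delay} days later")
```

Keeping both dates on every record is what later makes it possible to tell a late arrival apart from a regular sale.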

A ‘back-dated adjustment’ or ‘late arriving record’ can appear in many forms, and which form exactly depends on how the system works, what is allowed and what kind of interface is used to determine the changes in data.

In this case, a separate transaction was created in the operational (sales) system for the offset value (-20). But this change could also have appeared as an update to the original records, for example if the original values of the 2023-01-01 and 2023-01-04 records had been updated to 0.

In this scenario, the operational system might not even have the original values anymore but just show the current state. The interface to the data solution would detect this as a data change and deliver a record with the new value, so the data solution could still show both the old and the updated values, each as of the time it was received, in a transactional view as shown here.
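As a rough sketch of that second scenario, the snippet below keeps an append-only list of versions per source record and adds a new version whenever the value in the source differs from the latest one known. The record keys (`sale-1`, `sale-2`), the `history` structure, and the `capture_changes` function are all invented for illustration; real interfaces detect changes in many different ways.

```python
from datetime import date

# Hypothetical history kept by the data solution:
# one list of (received_date, units) versions per source record key.
history = {
    "sale-1": [(date(2023, 1, 1), 4)],
    "sale-2": [(date(2023, 1, 4), 16)],
}


def capture_changes(history, snapshot, received_date):
    """Append a new version for every key whose value differs from the latest known one."""
    for key, units in snapshot.items():
        versions = history.setdefault(key, [])
        if not versions or versions[-1][1] != units:
            versions.append((received_date, units))


# On 2023-02-02 the source system only shows the current, overwritten values.
capture_changes(history, {"sale-1": 0, "sale-2": 0}, date(2023, 2, 2))

for key, versions in history.items():
    print(key, versions)
```

The source system has lost the original 4 and 16, but the history still holds them next to the updated values, each with the date it was received.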

Back to Bob. If the data had been received at the right time, the overview would have looked like this!

If the transaction containing the -20 correction had been received on 2023-01-10, as intended, the balance would have worked out differently.
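The 'received on time' balance can be worked out by ordering the transactions by the date they are dated rather than by arrival. A small sketch, using the figures from the story and made-up variable names:

```python
from datetime import date
from itertools import accumulate

# (transaction date, units), listed here in the order they arrived.
transactions = [
    (date(2023, 1, 1), 4),
    (date(2023, 1, 4), 16),
    (date(2023, 1, 22), 60),
    (date(2023, 1, 26), 20),
    (date(2023, 1, 10), -20),  # the back-dated adjustment
]

# Order by the date each transaction applies to, not by arrival.
by_transaction_date = sorted(transactions)
balances = list(accumulate(units for _, units in by_transaction_date))

for (day, units), balance in zip(by_transaction_date, balances):
    print(f"{day}  units={units:>4}  balance={balance:>3}")
```

Seen this way, the balance drops to 0 on 2023-01-10 and the month closes at 80 instead of 100.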

So, at least it’s explainable how these different results have been created. Back when Bob ran the report, the backdated adjustment / late-arriving data was not yet known so it makes sense he got the 100 sales figure as a result. And later, when the correction was known, the result would give 80.
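One way to explain the gap between the two report runs is to list what arrived in between. The sketch below assumes Bob's first run happened on 2023-02-01 (just after midnight at month-end) and the second run exactly one week later; the names `received`, `first_run`, `second_run` and `total_known_at` are hypothetical. All records in the list belong to January, so no filter on the transaction date is needed here.

```python
from datetime import date

# (received date, units) for all January transactions.
received = [
    (date(2023, 1, 1), 4),
    (date(2023, 1, 4), 16),
    (date(2023, 1, 22), 60),
    (date(2023, 1, 26), 20),
    (date(2023, 2, 2), -20),  # the late-arriving adjustment
]

first_run = date(2023, 2, 1)   # assumption: Bob's run just after midnight
second_run = date(2023, 2, 8)  # assumption: one week later


def total_known_at(run_date):
    """Sum the units of every record the data solution had received by run_date."""
    return sum(units for received_date, units in received if received_date <= run_date)


# Records that arrived after the first run but before (or on) the second run.
arrived_between = [(d, u) for d, u in received if first_run < d <= second_run]
difference = total_known_at(second_run) - total_known_at(first_run)

print(total_known_at(first_run), total_known_at(second_run), difference)
print(arrived_between)
```

The difference between the two totals is fully accounted for by the single record that arrived between the runs.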

How do we manage this?

This is something that data solutions do. It’s called providing a bitemporal view of data.

Using Assertion and State timelines

To illustrate this data behaviour, I would like to introduce the terms assertion timeline and state timeline.

The assertion timeline represents the time when data (record, object) became known to the data solution, when the data was received. It is a technical timeline, managed by the data solution, that shows the order of arrival (inscription) in the solution. In the physical model, this is represented by the inscription timestamp, the moment the data first reached the data solution environment.

Different methodologies use different terms for this. In Data Vault, for example, this is the load date/time stamp (LDTS).

The data solution is in complete control of the assertion timeline, because it is the solution itself that issues the inscription timestamp – the moment of arrival. Therefore, it is always sequential and immutable.
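As a loose illustration of what 'in complete control' can mean, the sketch below shows an append-only log that issues the inscription timestamp itself on arrival. The `InscriptionLog` class is hypothetical, and the guard that bumps a timestamp by one microsecond when the clock repeats or steps backwards is my own assumption about one way to keep the sequence strictly increasing, not a description of any specific platform.

```python
from datetime import datetime, timedelta, timezone


class InscriptionLog:
    """Hypothetical append-only store that stamps each record on arrival."""

    def __init__(self):
        self._rows = []
        self._last_stamp = None

    def append(self, payload):
        stamp = datetime.now(timezone.utc)
        # Never repeat or go backwards, even if the system clock does.
        if self._last_stamp is not None and stamp <= self._last_stamp:
            stamp = self._last_stamp + timedelta(microseconds=1)
        self._last_stamp = stamp
        self._rows.append((stamp, payload))
        return stamp

    def rows(self):
        # Return a tuple so callers cannot add, remove, or reorder rows.
        return tuple(self._rows)


log = InscriptionLog()
stamps = [log.append({"units": units}) for units in (4, 16, 60, 20, -20)]
print(stamps == sorted(stamps))
```

There is deliberately no method to update or delete a row: once inscribed, a record and its timestamp stay as they are.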

In addition to the assertion timeline, the technical timeline that is controlled by the data solution, a data solution can also define a state timeline.

This is the real-world timeline that the data solution is not in direct control of. It is the interpretation of a ‘business’ or ‘functional’ timeline based on data available in the systems that provide the data to the solution.

A good way to look at this, at least for me, is to think of the state timeline as what the users of the systems would expect to see when they use those systems for their day-to-day activities. If they open a screen or run a report in their system, what would they see? What data would be valid from this perspective?

The definition of what the state timeline is, or is called, also varies between methodologies. A practical approach is to define a standard attribute for this, to which the appropriate date/time values from the originating systems are mapped.
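Such a mapping could look something like the sketch below. The source names, the source attribute names, and the `state_timestamp` attribute are all assumptions made up for this example; each organisation would define its own.

```python
from datetime import datetime

# Hypothetical mapping: which source attribute feeds the standard state timestamp.
STATE_ATTRIBUTE_BY_SOURCE = {
    "sales_system": "transaction_date",
    "billing_system": "invoice_date",
}


def with_state_timestamp(source_name, row):
    """Return a copy of the row with the standard state timestamp attribute added."""
    source_attribute = STATE_ATTRIBUTE_BY_SOURCE[source_name]
    standardised = dict(row)
    standardised["state_timestamp"] = datetime.fromisoformat(row[source_attribute])
    return standardised


row = with_state_timestamp(
    "sales_system",
    {"transaction_date": "2023-01-10", "units": -20},
)
print(row["state_timestamp"])
```

The original source attribute is kept on the row, so the mapping adds a common handle for the state timeline without hiding where the value came from.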

For many reasons the state timeline is often, indeed almost always, different from the assertion timeline, the time that data arrives in the data solution. For a data solution to be able to unambiguously report on what really happened, you need to combine these two perspectives on time.

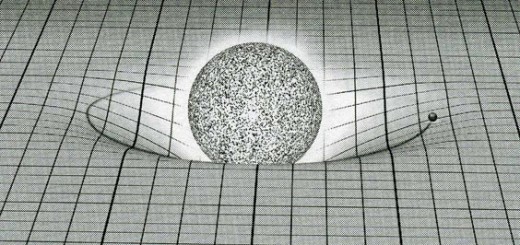

To visualise this, the assertion timeline and state timeline can be mapped to a cartesian plane such as shown below.

This way of visualising time has been copied from Dirk Lerner’s materials on temporal modelling (with permission). He has many more examples on different ways of looking at time and offers detailed temporal training on this, so if this is of interest please have a look at https://tedamoh.com/en/academy/training/temporal-data.

Given the vertical assertion timeline axis and the horizontal state timeline axis, we can plot the transactions on the plane.

When we follow the transactions, and map these to the plane, a pattern of data changes emerges.

On the Cartesian plane, we can traverse the y-axis to go back and forth in time following the assertion timeline. Traversing the x-axis left and right travels through time following the state timeline.

Using this approach we can pinpoint exactly what the state of data was at a given point in time on this two-dimensional plane. In the image below, the horizontal red line intersects the assertion timeline and represents which data was available at the point of intersection. The same applies for the vertical line, but from the state timeline’s perspective.

This is what the state of data was when Bob ran the report just after midnight at the end of the month.

Since the adjustment received on 2023-02-02 has not arrived yet, the balance at this point in time is 100.

To replicate this state from a later point in time, when later transactions are already available (as we are doing now), we travel back down the assertion timeline, moving the horizontal line to the exact same spot.

The horizontal red line intersects the assertion timeline before 2023-02-02, indicating that the adjustment had not yet been received.

We have travelled back in time along the assertion timeline, to where the line crosses four ‘blocks’, representing four transactions.

And this is what happens when Bob’s manager (as well as Bob later) runs the report a week later.

Now, the assertion timeline ‘hits’ five blocks / records, which includes the back-dated adjustment.
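The two red lines can be expressed as a pair of filters, one per timeline. The sketch below is a simplified illustration rather than a full bitemporal implementation: it assumes the regular sales were received on the day they were made, uses 2023-02-01 and 2023-02-08 as the two report moments, and the function and variable names are made up.

```python
from datetime import date

# (state date, assertion date, units)
records = [
    (date(2023, 1, 1), date(2023, 1, 1), 4),
    (date(2023, 1, 4), date(2023, 1, 4), 16),
    (date(2023, 1, 22), date(2023, 1, 22), 60),
    (date(2023, 1, 26), date(2023, 1, 26), 20),
    (date(2023, 1, 10), date(2023, 2, 2), -20),  # the back-dated adjustment
]


def visible_records(assertion_point, state_point):
    """Records known at assertion_point (y-axis) and dated up to state_point (x-axis)."""
    return [
        (state_date, assertion_date, units)
        for state_date, assertion_date, units in records
        if assertion_date <= assertion_point and state_date <= state_point
    ]


def balance(rows):
    return sum(units for _, _, units in rows)


month_end = date(2023, 1, 31)
bobs_view = visible_records(date(2023, 2, 1), month_end)
managers_view = visible_records(date(2023, 2, 8), month_end)

print(len(bobs_view), balance(bobs_view))
print(len(managers_view), balance(managers_view))
```

Moving the state point works the same way. For example, the balance as at 2023-01-15 is 20 when viewed from 2023-02-01, and 0 when viewed from 2023-02-08, because the adjustment dated 2023-01-10 is only visible from the later assertion point.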

Bob’s worries are over.

Bob now knows how to run ‘as of’ queries against the data, and armed with this knowledge he can prove that the data itself is not ‘wrong’.

He can always reproduce the values exactly as they were at a point in time in the past, and now can even offer the business different perspectives of using the data.

The data becomes an asset to explain what really happens in the business. This provides a great discussion item on how to deal with this, so that the business as a whole can align on data interpretation.

And, equally important, he understands he doesn’t need to set the alarm for midnight again because he can always reproduce this point in time from anywhere (anywhen?) in the future.

The characters / avatars used in this post are graciously provided by Peter Avenant, who has a real knack for design.