Generate Data Vault Hub load processes easily using Handlebars

Generating ETL processes to load Data Vault Hub tables using the Handlebars templating engine is the topic of this 3rd post in the simple ETL generation series.

In the first post of this series we have looked into the basic Hub ETL loading pattern as well as the associated automation process flow. We used plain SQL to automate development of the loading processes using (TEAM generated) metadata. The second post explored alternative ways generate code, by providing an introduction in code templating engines in general and Handlebars in particular.

Now, we can combine the two and use the Handlebars templating engine to generate Data Vault Hub ETLs based on SQL. First up, we will replicate the output of the SQL cursor using Handlebars but later on in this post we’ll start to see the power and flexibility that this approach provides.

To do this, first we make sure we have defined the class that contains the metadata. After this we define the template itself and merge the two.

Connecting to the metadata

Compared to the code from the previous examples we’re making a few changes to allow us to move away from the Yaml metadata input file and connect straight to a database. This is similar to how we operated the cursor in the SQL-only example.

Technically speaking, we need to define a class / object model to capture the metadata from the database. We can reuse the same metadata that we used for the SQL example – sourced from the TEAM INTERFACE_SOURCE_HUB_XREF view (and provided as DDL and DML here).

The metadata requirements for generating the base Data Vault Hub pattern are fairly straightforward. As a reminder, in principle the only elements we need are:

- <targetTable>; the name of the target Hub table

- <targetBusinessKey>; the name of the the target Hub business key attribute

- <sourceTable>; the name of the source table

- <sourceBusinessKey>; the definition (attributes) of the business key as it is known in the source table

In the upcoming posts in this series we will add support for additional use-cases such as composite and concatenated keys, pivoting and hard-coded values – the edge-cases for Hub ETLs. This will require a few extensions, but first let’s continue to set up the generation framework.

The class to store the above metadata in code can look like this:

using System.Collections.Generic;

class MappingList

{

public List<IndividualMetadataMapping> metadataMapping { get; set; }

}

class IndividualMetadataMapping

{

public string businessKeySource { get; set; }

public string businessKeyTarget { get; set; }

public string hubTable { get; set; }

public string hubTableHashKey { get; set; }

public string sourceTable { get; set; }

}

This is even simpler than the (initial) examples from the previous post. All we need to do is instantiate a ‘MappingList’, which creates a list (object) containing ‘IndividualMetadataMappings’ called ‘metadataMapping’.

Note that there is a derived object in the class called hubTableHashKey. This is a value derived from the hubTable using a convention. More on this later.

In other words, we have a collection of individual mappings that is stored in a single list – akin to the recordset provided by the metadata from the database table.

Loading the metadata into the class

Connecting to the database and populating the object model with the metadata is the most complex part of the solution. With the Yaml example we could use the file structure and headings to directly load (deserialise) the contents into the defined class. But using the database as a source we need to take a couple of additional steps.

The first step is to retrieve the data and store this into a C# DataTable object, basically an in-memory table. I have used a custom method (routine, function) to fill the DataTable with the content from the database. This method is available for download further down in this post as part of the complete code for those interested

// Retrieve metadata and store in a data table object

var queryHubGen = @"SELECT

[SOURCE_NAME]

,[SOURCE_BUSINESS_KEY_DEFINITION]

,[HUB_NAME]

,[HUB_BUSINESS_KEY_DEFINITION]

FROM [interface].[INTERFACE_SOURCE_HUB_XREF]

WHERE [HUB_NAME] IN ('HUB_CUSTOMER',

'HUB_INCENTIVE_OFFER')";

// Connect to the database and fill the DataTable

var sqlConn = new SqlConnection

{

ConnectionString = "Server = <>; Initial Catalog = <>; user id = <>; password = <>; "

};

var hubTables = Generic.GetDataTable(ref sqlConn, queryHubGen);

By executing this code the metadata is now made available in an internal C# object which we can easily manipulate and use. It’s time to instantiate the class we defined earlier, so we can add the metadata to this instance.

To achieve this, we iterate through the DataTable and add the rows with the elements (columns) to the class instance as a C# List object. I have used a LINQ expression for this, but there are various other ways you can achieve this.

In any case, you essentially create a List of the type ‘IndividualMetadataMapping’ and add it to the ‘listing’ variable – which is the instance of the MappingList class.

// Create an instance of the 'MappingList' class / object model

MappingList listing = new MappingList();

// Move the data table to a List object

List mappings = hubTables.AsEnumerable().Select(row =>

new IndividualMetadataMapping

{

hubTable = (string)row["HUB_NAME"],

hubTableHashKey = row["HUB_NAME"].ToString().Replace("HUB_","")+"_HSH",

sourceTable = (string)row["SOURCE_NAME"],

businessKeySource = (string)row["SOURCE_BUSINESS_KEY_DEFINITION"],

businessKeyTarget = (string)row["HUB_BUSINESS_KEY_DEFINITION"]

}).ToList();

// Add the list of individual mappings to the class instance

listing.metadataMapping = mappings;

This is where the convention to derive the name of the Hash Key from the Hub table comes in. While collecting the metadata the value for the Hub table name is used to derive the name of the (target) Hash Key by removing the ‘HUB_’ prefix and adding a ‘_HSH’ suffix.

We now have the metadata ready to go, so let’s define the template we want to map this against.

The Hub template in Handlebars

Similar to the previous Handlebars example we’re using a separate file to store the template itself. This makes it easy to maintain and alter, and we can add as many different templates as we like.

The template itself is the same as was used in the SQL example from the first post in this series. We’re trying to replicate what was done using the SQL cursor. The pattern therefore looks, unsurprisingly, very similar.

{{#each metadataMapping }}

-- Working on mapping to {{hubTable}} from source table {{sourceTable}}

INSERT INTO DVI_200_Integration_Layer.dbo.{{hubTable}}

(

{{hubTableHashKey}},

{{businessKeyTarget}},

LOAD_DATETIME,

ETL_INSERT_RUN_ID,

RECORD_SOURCE

)

SELECT

HASHBYTES('MD5',

ISNULL(RTRIM(CONVERT(NVARCHAR(100), {{businessKeySource}})), 'N/A') + '|'

) AS {{hubTableHashKey}},

{{businessKeySource}} AS {{businessKeyTarget}},

MIN(stg.LOAD_DATETIME) AS LOAD_DATETIME,

-1 AS ETL_INSERT_RUN_ID,

stg.RECORD_SOURCE

FROM {{sourceTable}} stg

LEFT OUTER JOIN DVI_200_Integration_Layer.dbo.{{hubTable}} hub ON stg.{{businessKeySource}} = hub.{{businessKeyTarget}}

WHERE stg.{{businessKeySource}} IS NOT NULL

AND hub.{{businessKeyTarget}} IS NOT NULL

GROUP BY

HASHBYTES('MD5',

ISNULL(RTRIM(CONVERT(NVARCHAR(100), stg.{{businessKeySource}})),'N/A') + '|'

),

stg.{{businessKeySource}},

stg.RECORD_SOURCE

{{/each}}

For each entry in the metadataMapping List the five defined variables are dynamically added to the template. In this example I haven’t used any parameters, but of course these can be either added to the class and template, or left as-is (hard-coded) in the template as a convention.

In both cases this is easy to modify at any time.

For completeness’ sake, a template file always needs to be compiled to be able to be merged with the context / content. This is done using the compile statement provided by Handlebars.Net.

// Load the file from disk into a variable

var stringTemplate = File.ReadAllText(AppDomain.CurrentDomain.BaseDirectory + @"....\HubTemplate_Original.handlebars");

// Compile the template

var template = Handlebars.Compile(stringTemplate);

Generating the results

Merging the metadata with the compiled template is extremely straightforward. The code displayed below presents the generated SQL – all individual Hub ETL loading processes in SQL – back to the user.

// Combine the template with the metadata, and capture this in a variable

var result = template(listing);

// Return the result to the user

Console.WriteLine(result);

Console.ReadKey();

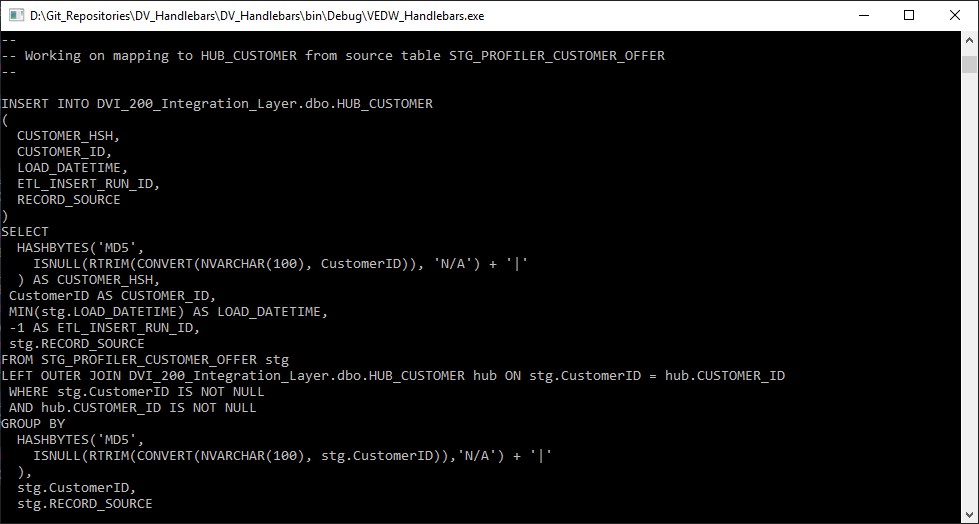

One of the output queries is shown below as an example. We now have automated the Data Vault Hub ETL processes.

Extreme flexibility

I received various emails with question and suggestions on the post that uses SQL (cursor) to generate Hubs, mainly about the pattern itself and potential improvements that could be considered.

One of the remarks was about the use of MIN(LOAD_DATETIME), and the fact that (because of this) the RECORD_SOURCE attribute is added to the GROUP BY statement.

This only works if each Staging Layer (i.e. Staging Area or Persistent Staging Area) table is dedicated, and therefore can only ever contain a single record source value. The convention that is used is that every individual file / table into the Staging Layer has its own unique table.

The pattern would not support shared Staging Layer tables, because there could be multiple different RECORD_SOURCE values in a single table.

To cater for this, a potential improvement is to sort the data using window functions (partition by the Business Key, order by the Load Date / Time Stamp) and select the first row. This is a more elegant way of retaining the minimum Load Date / Time Stamp for each key.

Another response was related to performance. It would be possible to perform the key lookup earlier, and consequently remove the Business Keys that already exists in the Hub before applying the hash function. This saves on some unnecessary CPU overhead.

Lastly, depending on the scenario / local setup a WHERE NOT EXISTS clause may perform better than a LEFT OUTER JOIN.

The majority of feedback was provided by Eckhard Zemp – many thanks!

Incorporating improvements like these is where the power of a templating engine really comes into play. The metadata doesn’t change, only the template does. All that is needed to apply these kinds of changes across all ETL processes is a few tweaks in the template file.

The Hub pattern revisited

When we take the above feedback into account, the revised Hub template looks as follows:

{{#each metadataMapping }}

-- Working on mapping to {{hubTable}} from source table {{sourceTable}}

INSERT INTO DVI_200_Integration_Layer.dbo.{{hubTable}}

(

{{hubTableHashKey}},

{{businessKeyTarget}},

LOAD_DATETIME,

ETL_INSERT_RUN_ID,

RECORD_SOURCE

)

SELECT

HASHBYTES('MD5',

ISNULL(RTRIM(CONVERT(NVARCHAR(100), {{businessKeyTarget}})), 'N/A') + '|'

) AS {{hubTableHashKey}},

{{businessKeyTarget}},

LOAD_DATETIME,

ETL_INSERT_RUN_ID,

RECORD_SOURCE

FROM

(

SELECT

sub.*,

ROW_NUMBER() OVER (PARTITION BY {{businessKeyTarget}} ORDER BY LOAD_DATETIME ASC) AS LDTS_ORDER

FROM

(

SELECT

stg.{{businessKeySource}} as {{businessKeyTarget}},

stg.LOAD_DATETIME,

-1 AS ETL_INSERT_RUN_ID,

stg.RECORD_SOURCE

FROM {{sourceTable}} stg

WHERE NOT EXISTS

(

SELECT 1

FROM DVI_200_Integration_Layer.dbo.{{hubTable}} hub

WHERE stg.{{businessKeySource}} = hub.{{businessKeyTarget}}

)

) sub

) supersub

WHERE LDTS_ORDER=1

{{/each}}

In this template we have removed the aggregation (MIN) and corresponding group by in favour of a ROW_NUMBER() function. We also moved the key lookup to the inner query and swapped the LEFT OUTER JOIN for a WHERE NOT EXISTS statement. Easy!

When we run this template, the output is presented accordingly:

There has been no change to the code, and the metadata is still the same.

By tweaking the template file to accommodate new ideas we can continuously keep up with progressive insights and make the modifications that work best in our own specific technical environment.

And the best part: when you move to other technologies or platforms you can take the metadata with you – your real Intellectual Property. It’s only the syntax in the pattern that needs a review.

For those interested; the code for these examples can be downloaded below. Both template files have been added, they are called ‘original’ and ‘modified’ and can be (un)commented in the code if required.

Please rename the .txt file to .rar; I had some issues uploading the code so renamed the archive to .txt.